Data and Goliath

I recommend Bruce Schneier’s new book Data and Goliath: The Hidden Battles to Collect Your Data and Control Your World to everyone.

Schneier, as you probably know, is a security expert. A real one, a good one, and a thoughtful one. He wrote the book on implementing cryptography in software, he designed the playing card encryption method used in Neal Stephenson’s Cryptonomicon, and he helped reporters understand the Snowden documents.

This is his post-Snowden book, on everything that’s known about how we’re being monitored every second of our lives, by whom, why this is a very serious problem, and what we can do about it. His three section headings set it out clearly: The World We’re Creating, What’s at Stake, and What to Do About It. In each section he explains things clearly and understandably without requiring any major technical knowledge. Often there isn’t time to get into technical details, anyway: we are monitored so minutely online, and what the NSA and other spy agencies do is so staggeringly intrusive, that the briefest description of one technique or system is all that’s needed to get the point across before moving on to another.

Data and Goliath is the book I’ve been waiting for, the one that lays it all out and brings all of the recent discoveries and revelations together. It has much that is new, such as discussions of why privacy is necessary so that people have the freedom to break some laws that ultimately lead to societal change (homosexuality is one of his examples), and good arguments against the “but I have nothing to hide, I don’t care” idiocy of people who ignorantly give up all their privacy. (Glenn Greenwald’s No Place to Hide: Edward Snowden, the NSA and the U.S. Surveillance State has more good arguments, such as: you may not care, but millions of people around the world trying to make things better do, and they’re in danger of being arrested and beaten just for speaking their mind).

He ends with what we can do, from the small scale (paying cash, browser privacy extensions, leaving cell phones at home) to the large (major political action). Here’s the final paragraph of the penultimate chapter:

There is strength in numbers, and if the public outcry grows, governments and corporations will be forced to respond. We are trying to prevent an authoritarian government like the one portrayed in Orwell’s Nineteen Eighty-Four, and a corporate-ruled state like the ones portrayed in countless dystopian cyberpunk science fiction novels. We are nowhere near either of those endpoints, but the train is moving in both those directions, and we need to apply the brakes.

Schneier’s page about the book has lots of links to excerpts and reviews. Have a look, then get the book. You should read it.

Here in Canada, just this week we learn that the Communications Security Establishment continues to spy on people worldwide and see the Conservatives push Bill C-51. Schneier’s book helps here as everywhere else: what is happening, why it matters, and what to do.

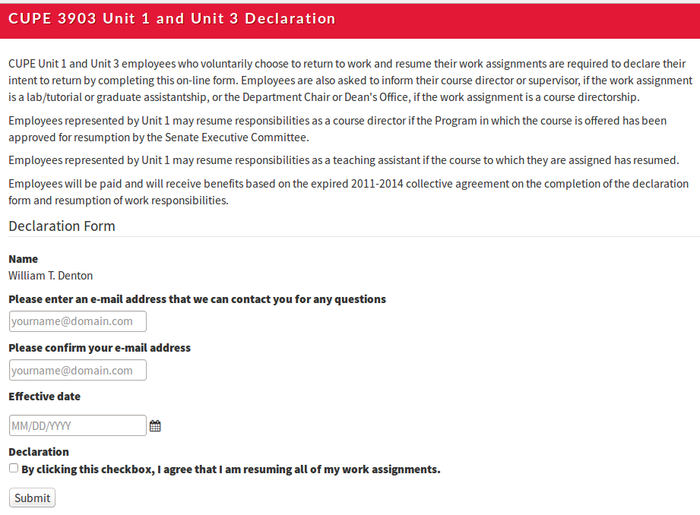

Click here to scab

The strike at York University, where I work, enters its fourth week tomorrow.

The university’s Labour Disruption Update site (they are always very careful to call it a “labour disruption”, not a strike) has some information for people in CUPE 3903 Units 1 (TAs) and 3 (GAs) who want to come back to work. If you follow through and log in with your York account, this is what you get.

It’s sad to see York University, home to decades of progressive thinking and social activism, doing this.

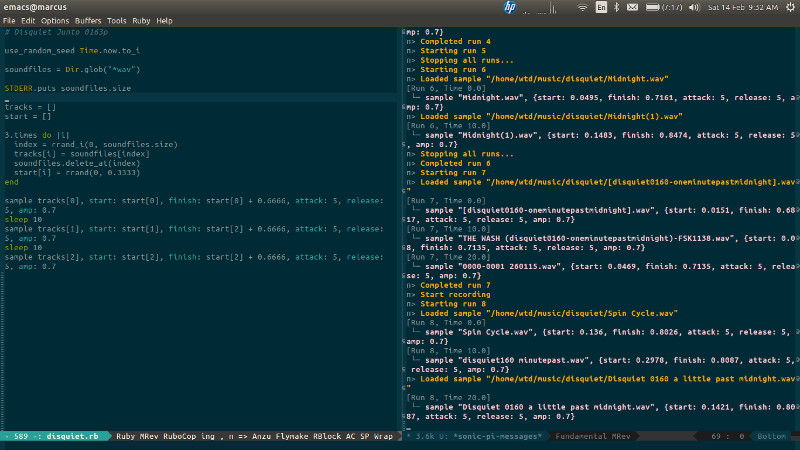

Disquiet Junto 0163

I follow Marc Weidenbaum’s collaborative musical project the Disquiet Junto to see what the projects are, and sometimes listen to the work people create. I’ve never contributed before, but the current project, Disquiet Junto Project 0163: Layering Minutes After Midnight, was something I could tackle easily with Sonic Pi, so I had a go.

The instructions for this project are:

Step 1: Revisit project #0160 from January 22, 2015, in which field recordings were made of the sound one minute past midnight:

Step 2: Locate segments that are especially quiet and meditative — and confirm that they are available for creative reuse. Many should have a Creative Commons license stating such, and if you’re not sure just check with the responsible Junto participant.

Step 3: Using segments from three different tracks from the January 22 project, create a new work of sound that layers the pre-existing material into something new, something nocturnal. Keep the length of your final piece to one minute.

Step 4: Upload the finished track to the Disquiet Junto group on SoundCloud.

Step 5: Be sure to include link/mentions regarding the source tracks.

Step 6: Then listen to and comment on tracks uploaded by your fellow Disquiet Junto participants.

What I did was this. First, I downloaded all of the downloadable WAV files in the project. (Sonic Pi can only sample them and FLAC, and for some reason the FLAC file I got didn’t work.)

Next I wrote a script that would choose three different WAV files at random and for each one a random starting time within the first 20 seconds of the track. (Assuming all tracks are exactly 60 seconds, this means choosing a random number between 0 and 1/3, because for Sonic Pi the start of a sample is at 0 and the end is at 1.)

use_random_seed Time.now.to_i

soundfiles = Dir.glob("*wav")

STDERR.puts soundfiles.size

tracks = []

start = []

3.times do |i|

index = rrand_i(0, soundfiles.size - 1) # rrand_i includes both endpoints

tracks[i] = soundfiles[index]

soundfiles.delete_at(index)

start[i] = rrand(0, 0.3333)

end

sample tracks[0], start: start[0], finish: start[0] + 0.6666, attack: 5, release: 5, amp: 0.7

sleep 10

sample tracks[1], start: start[1], finish: start[1] + 0.6666, attack: 5, release: 5, amp: 0.7

sleep 10

sample tracks[2], start: start[2], finish: start[2] + 0.6666, attack: 5, release: 5, amp: 0.7

It plays the fragment of the first track, then 10 seconds later starts the fragment of the second track, then 10 seconds later starts the fragment of the third track. Since each is 40 seconds long, for 20 seconds all three are on top of each other, then the first ends, then the second, and for the last 10 seconds only the third track is playing. The attack and release settings mean each track takes 5 seconds to fade in and 5 seconds to fade out.
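The overlap arithmetic can be checked in plain Ruby, without Sonic Pi. This is only a sketch: it assumes every source track is exactly 60 seconds long, and the names (`layers_at`, `offsets` and so on) are made up for illustration and are not part of the script above.

```ruby
# Plain Ruby, no audio: count how many fragments sound at a given moment.
track_seconds = 60.0                        # assumed length of every source track
fragment_seconds = 0.6666 * track_seconds   # finish - start, roughly 40 seconds
offsets = [0, 10, 20]                       # when each fragment is triggered

# Number of fragments playing at time t (in seconds).
layers_at = lambda do |t|
  offsets.count { |offset| t >= offset && t < offset + fragment_seconds }
end

[5, 15, 30, 45, 55].each do |t|
  puts "t = #{t}s: #{layers_at.call(t)} layer(s)"
end

total_seconds = offsets.last + fragment_seconds
puts "Total length: #{total_seconds.round} seconds"
```

The fade-ins and fade-outs are not modelled here; the sketch only counts which fragments are sounding.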

I was doing all this in Emacs, in sonic-pi-mode. After some testing I ran M-x sonic-pi-start-recording, ran the script, then ran M-x sonic-pi-stop-recording and saved the file.

These are the three tracks it chose:

- Spin Cycle-disquiet160-oneminutepastmidnight by High Tunnels

- archway road midnight (disquiet160-oneminutepastmidnight) by Zedkah

- Can you hear the boredom? (Disquiet0160-Oneminutepastmidnight) by moduS ponY.

All have Creative Commons licenses, which I checked before going further.

The result was 72 seconds long (a few seconds were added while I ran the start/stop but I don’t see how that added up to 12) so I used Audacity to change the length to 60s without changing the pitch. I went back later to edit out the start/stop dead time but accidentally overwrote my original file, so I left it as is.

The result is “Waves Upon Waves” (embedded from SoundCloud):

Measure the Library Freedom

The winners of the Knight News Challenge: Libraries were announced a few days ago. I didn’t know about the Knight Foundation (those are the same Knights as in Knight Ridder (“Not to be confused with Knight Rider or Night Rider”)) but they’re giving out lots of money to lots of good projects. DocumentCloud got funded a few years ago, and the Internet Archive got $600,000 this round, and well deserved it is. I was struck by how two winners fit together: the Library Freedom Project, which got $244,700, and Measure the Future, which got $130,000.

The Library Freedom Project has this goal:

Providing librarians and their patrons with tools and information to better understand their digital rights by scaling a series of privacy workshops for librarians.

Measure the Future says:

Imagine having a Google-Analytics-style dashboard for your library building: number of visits, what patrons browsed, what parts of the library were busy during which parts of the day, and more. Measure the Future is going to make that happen by using simple and inexpensive sensors that can collect data about building usage that is now invisible. Making these invisible occurrences explicit will allow librarians to make strategic decisions that create more efficient and effective experiences for their patrons.

Our goal is to enable libraries and librarians to make the tools that measure the future of the library as physical space. We are going to build open tools using open hardware and open source software, and then provide open tutorials so that libraries everywhere can build the tools for themselves.

I like collecting and analyzing data, I like measuring things, I like small computers and embedded devices, even smart dust—it always comes back to Vernor Vinge, this time A Deepness In the Sky—but I must say I don’t like Google Analytics even though we use it at work. Any road up:

We will be producing open tutorials that outline both the open hardware and the open source software we will be using, so that any library anywhere will be able to purchase inexpensive parts, put them together, and use code that we provide to build their own sensor networks for their own buildings.

The people behind Measure the Future are all top in the field, but, cripes, it looks like they want to combine users, analytics, metrics, sensors, embedded devices, free software, open hardware and “library as place” into a well-intentioned ROI-demonstrating panopticon.

I’m not going to get all Michel Foucault on you, but I recently read The Inspection House: An Impertinent Field Guide to Modern Surveillance by Tim Maly and Emily Horne:

The panopticon is the inflexion point and the culmination point of this new regime. It is the platonic ideal of the control the disciplinary society is trying to achieve. Operation of the panopticon does not require special training or expertise; anyone (including the children or servants of the director, as Bentham suggests) can provide the observation that will produce the necessary effects of anxiety and paranoia in the prisoner. The building itself allows power to be instrumentalized, redirecting it to the accomplishment of specific goals, and the institutional architecture provides the means to achieve that end.

Measure the Future has all the best intentions and will use safe methods, but still, it vibes hinky, this idea of putting sensors all over the library to measure where people walk and talk and, who knows, where body temperature goes up or which study rooms are the loudest … and then that would get correlated with borrowing or card swipes at the gate … and knowing that the spy agencies can hack into anything unless the most extreme security measures are taken and there’s never a moment’s lapse … well, it makes me hope they’ll be in close collaboration with the Library Freedom Project.

And maybe the Library Freedom Project can ask them why, when we’re trying to help users protect themselves as their own governments try to eliminate privacy forever, we’re planting sensors around our buildings because we now think that neverending monitoring of users will help us improve our services and show our worth to our funders.

Steve Reich phase pieces with Sonic Pi

The first two of the phase pieces Steve Reich made in the sixties, working with recorded sounds and tape loops, were It’s Gonna Rain (1965) and Come Out (1966), both of which are made of two loops of the same fragment of speech slowly going out of phase with each other and then coming back together as the two tape players run at slightly different speeds. I was curious to see if I could make phase pieces with Sonic Pi, and it turns out it takes little code to do it.

Here is the beginning of Reich’s notes on “It’s Gonna Rain (1965)” in Writings on Music, 1965–2000 (Oxford University Press, 2002):

Late in 1964, I recorded a tape in Union Square in San Francisco of a black preacher, Brother Walter, preaching about the Flood. I was extremely impressed with the melodic quality of his speech, which seemed to be on the verge of singing. Early in 1965, I began making tape loops of his voice, which made the musical quality of his speech emerge even more strongly. This is not to say that the meaning of his words on the loop, “it’s gonna rain,” were forgotten or obliterated. The incessant repetition intensified their meaning and their melody at one and the same time.

Later:

I discovered the phasing process by accident. I had two identical tape loops of Brother Walter saying “It’s gonna rain,” and I was playing with two inexpensive tape recorders—one jack of my stereo headphones plugged into machine A, the other into machine B. I had intended to make a specific relationship: “It’s gonna” on one loop against “rain” on the other. Instead, the two machines happened to be lined up in unison and one of them gradually started to get ahead of the other. The sensation I had in my head was that the sound moved over to my left ear, down to my left shoulder, down my left arm, down my leg, out across the floor to the left, and finally began to reverberate and shake and become the sound I was looking for—“It’s gonna/It’s gonna rain/rain”—and then it started going the other way and came back together in the center of my head. When I heard that, I realized it was more interesting than any one particular relationship, because it was the process (of gradually passing through all the canonic relationships) making an entire piece, and not just a moment in time.

The audio sample

First I needed a clip of speech to use. Something with an interesting rhythm, and something I had a connection with. I looked through recordings I had on my computer and found an interview my mother, Kady MacDonald Denton, had done in 2007 on CBC Radio One after winning the Elizabeth Mrazik-Cleaver Canadian Picture Book Award for Snow.

She said something I’ve never forgotten that made me look at illustrated books in a new way:

A picture book is a unique art form. It is the two languages, the visual and the spoken, put together. It’s sort of like a—almost like a frozen theatre in a way. You open the cover of the books, the curtain goes up, the drama ensues.

I noticed something in “theatre in a way,” a bit of rhythmic displacement: there’s a fast ONE-two-three one-two-THREE rhythm.

That’s the clip to use, I decided: the length is right (1.12 seconds), the four words are a little mysterious when isolated like that, and the rhythm ought to turn into something interesting.

Phase by fractions

The first way I made a phase piece was not with the method Reich used but with a process simpler to code: here one clip repeats over and over while the other starts progressively later in the clip each iteration, with the missing bit from the start added on at the end.

The start and finish parameters specify where to clip a sample: 0 is the beginning, 1 is the end, and ratio grows from 0 to 1. I loop n+1 times to make the loops play one last time in sync with each other.

full_quote = "~/music/sonicpi/theatre-in-a-way/frozen-theatre-full-quote.wav"

theatre_in_a_way = "~/music/sonicpi/theatre-in-a-way/frozen-theatre-theatre-in-a-way.wav"

length = sample_duration theatre_in_a_way

puts "Length: #{length}"

sample full_quote

sleep sample_duration full_quote

sleep 1

4.times do

sample theatre_in_a_way

sleep length + 0.3

end

# Moving ahead by fractions of a second

n = 100

(n+1).times do |t|

ratio = t.to_f/n # t is a Fixnum, but we need ratio to be a Float

# This one never changes

sample theatre_in_a_way, pan: -0.5

# This one progresses through

sample theatre_in_a_way, start: ratio, finish: 1, pan: 0.5

sleep length - length * ratio

sample theatre_in_a_way, start: 0, finish: ratio, pan: 0.5

sleep length*ratio

end
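The timing in the fraction method can be checked in plain Ruby, with no audio. This is a rough sketch, not part of the piece: it uses the 1.12-second clip length mentioned earlier, a small n of 4 so the output stays short (the script uses 100), and hypothetical names (`schedule`, `tail`, `head`) that I made up for illustration.

```ruby
# Plain Ruby, no audio: the two sleeps in each pass always add up to one clip length.
length = 1.12   # clip duration in seconds, as quoted earlier
n = 4           # small value for illustration only

schedule = (0..n).map do |t|
  ratio = t.to_f / n
  tail = length - length * ratio   # sleep after playing start: ratio, finish: 1
  head = length * ratio            # sleep after playing start: 0, finish: ratio
  { ratio: ratio, tail: tail, head: head, total: tail + head }
end

schedule.each do |step|
  puts format("ratio %.2f: tail %.2fs + head %.2fs = %.2fs",
              step[:ratio], step[:tail], step[:head], step[:total])
end
```

Because every pass lasts exactly one clip length, the shifted clip stays locked to the fixed one and only the point where it starts moves.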

Phase by speed

The second method is to run one loop a tiny bit faster than the other and wait for it to eventually come back around and line up with the fixed loop. This is what Reich did, but here we achieve the effect with code, not analog tape players.

The rate parameter controls how fast a sample is played (< 1 is slower, > 1 is faster), and if n is how many times we want the fixed sample to loop then the faster sample will have length length - (length / n) and play at rate (1 - 1/n.to_f) (the number needs to be converted to a Float for this to work). It needs to loop (n * length / phased_length) times to end up in sync with the steady loop. (Again I add 1 to play both clips in sync at the end as they did in the beginning.)

For example, if the sample is 1 second long and n = 100, then the phased sample would play at rate 0.99, be 0.99 seconds long, and play 101 times to end up, after 100 seconds (actually 99.99, but close enough) back in sync with the steady loop, which took 100 seconds to play 1 second of sound 100 times.
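The numbers in that example can be worked through in plain Ruby. This is just a sketch of the arithmetic under the same assumptions (a 1-second sample and n = 100); names such as `loops_to_resync` are invented for illustration.

```ruby
# Plain Ruby, no audio: the arithmetic behind the speed-phasing example.
length = 1.0   # seconds, as in the worked example
n = 100

rate = 1 - 1 / n.to_f                                # n.to_f avoids integer division
phased_length = length - (length / n)                # seconds between phased triggers
loops_to_resync = (n * length / phased_length).to_i  # whole loops before lining up again
phased_elapsed = loops_to_resync * phased_length     # time taken by the phased loop
steady_elapsed = n * length                          # time taken by the steady loop

puts "rate: #{rate.round(4)}"
puts "phased length: #{phased_length.round(4)} seconds"
puts "loops to resync: #{loops_to_resync}"
puts "phased elapsed: #{phased_elapsed.round(2)} seconds"
puts "steady elapsed: #{steady_elapsed.round(2)} seconds"
```

The script adds one more loop on top of `loops_to_resync` so that both clips sound together one last time.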

It took me a bit of figuring to realize I had to convert numbers to Float or Integer here and there to make it all work, which is why to_f and to_i are scattered around.

full_quote = "~/music/sonicpi/theatre-in-a-way/frozen-theatre-full-quote.wav"

theatre_in_a_way = "~/music/sonicpi/theatre-in-a-way/frozen-theatre-theatre-in-a-way.wav"

length = sample_duration theatre_in_a_way

puts "Length: #{length}"

sample full_quote

sleep sample_duration full_quote

sleep 1

4.times do

sample theatre_in_a_way

sleep length + 0.3

end

# Speed phasing

n = 100

phased_length = length - (length / n)

# Steady loop

in_thread do

(n+1).times do

sample theatre_in_a_way

sleep length

end

end

# Phasing loop

((n * length / phased_length) + 1).to_i.times do

sample theatre_in_a_way, rate: (1 - 1/n.to_f)

sleep phased_length

end

This is the result:

Set n to 800 and it takes over fifteen minutes to evolve. The voice gets lost and just sounds remain.

“Time for something new”

In notes for “Clapping Music (1972)” (which I also did on Sonic Pi), Reich said:

The gradual phase shifting process was extremely useful from 1965 through 1971, but I do not have any thoughts of using it again. By late 1972, it was time for something new.

Setting up Sonic Pi on Ubuntu, with Emacs

It’s no trouble to get Sonic Pi going on a Raspberry Pi (Raspbian comes with it), and as I wrote about last week I had great fun with that. But my Raspberry Pi is slow, so it would often choke, and the interface is meant for kids so they can learn to make music and program, not for middle-aged librarians who love Emacs, so I wanted to get it running on my Ubuntu laptop. Here’s how I did it.

There’s nothing really new here, but it might save someone some time, because it involved getting JACK working, which is one of those things where you begin carefully documenting everything you do and an hour later you have thirty browser tabs open, three of them to the same mailing list archive showing a message from 2005, and you’ve edited some core system files but you’re sure you’ve forgotten one and don’t have a backup, and then everything works and you don’t want to touch it in case it breaks.

Linux and Unix users should go to the GitHub Sonic Pi repo and follow the generic Linux installation instructions, which is what I did. I run Ubuntu; I had some of the requirements already installed, but not all. Then:

cd /usr/local/src/

git clone git@github.com:samaaron/sonic-pi.git

cd sonic-pi/app/server/bin

./compile-extensions

cd ../../gui/qt

./rp-build-app

./rp-app-bin

The application compiled without any trouble, but it didn’t run because jackd wasn’t running. I had to get JACK going. The JACK FAQ helped.

sudo apt-get install qjackctl

qjackctl is a little GUI front end to control JACK. I installed it and ran it and got an error:

JACK is running in realtime mode, but you are not allowed to use realtime scheduling.

Please check your /etc/security/limits.conf for the following line

and correct/add it if necessary:

@audio - rtprio 99

After applying these changes, please re-login in order for them to take effect.

You don't appear to have a sane system configuration. It is very likely that you

encounter xruns. Please apply all the above mentioned changes and start jack again!

Editing that file isn’t the right way to do it, though. This is:

sudo apt-get install jackd2

sudo dpkg-reconfigure -p high jackd2

This made /etc/security/limits.d/audio.conf look so:

# Provided by the jackd package.

#

# Changes to this file will be preserved.

#

# If you want to enable/disable realtime permissions, run

#

# dpkg-reconfigure -p high jackd

@audio - rtprio 95

@audio - memlock unlimited

#@audio - nice -19

Then qjackctl gave me this error:

JACK is running in realtime mode, but you are not allowed to use realtime scheduling.

Your system has an audio group, but you are not a member of it.

Please add yourself to the audio group by executing (as root):

usermod -a -G audio (null)

After applying these changes, please re-login in order for them to take effect.

Replace “(null)” with your username. I ran:

usermod -a -G audio wtd

Logged out and back in and ran qjackctl again and got:

JACK compiled with System V SHM support.

cannot lock down memory for jackd (Cannot allocate memory)

loading driver ..

apparent rate = 44100

creating alsa driver ... hw:0|hw:0|1024|2|44100|0|0|nomon|swmeter|-|32bit

ALSA: Cannot open PCM device alsa_pcm for playback. Falling back to capture-only mode

cannot load driver module alsa

Here I searched online, looked at all kinds of questions and answers, made a cup of tea, tried again, gave up, tried again, then installed something that may not be necessary, but it was part of what I did so I’ll include it:

sudo apt-get install pulseaudio-module-jack

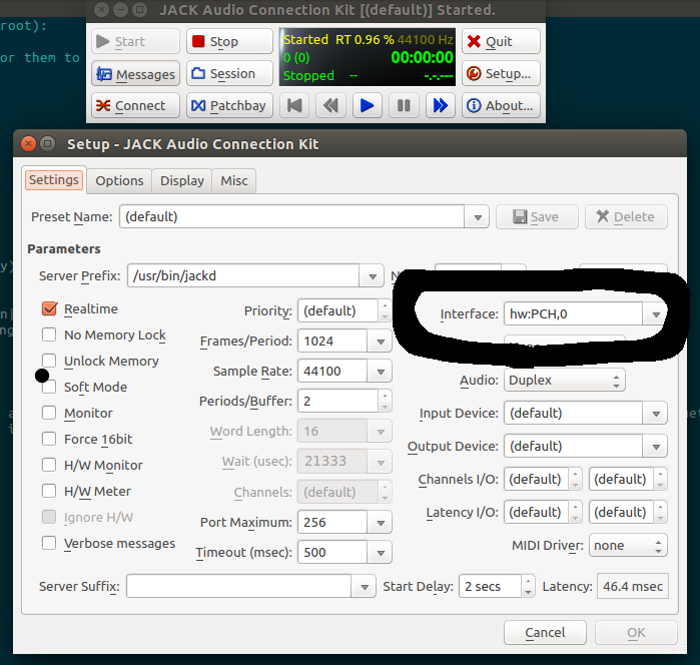

Then, thanks to some helpful answer somewhere, I got onto the real problem, which is about where the audio was going. I grew up in a world where home audio signals (not including the wireless) were transmitted on audio cables with RCA jacks. (Pondering all the cables I’ve used in my life, I think the RCA jack is the closest to perfection. It’s easy to identify, it has a pleasing symmetry and design, and there is no way to plug it in wrong.) Your cassette deck and turntable would each have one coming out and you’d plug them into your tuner and then everything just worked, because when you needed you’d turn a knob that meant “get the audio from here.” I have only the haziest idea of how audio on Linux really works, but at heart there seems to be something similar going on, because what made it work was telling JACK which audio thingie I wanted.

You can pull up that window by clicking on Settings in qjackctl. The Interface line said “Default,” but I changed it to “hw:PCH (HDA Intel PCH (hw: 1)”, whatever that means) and it worked. What’s in the screenshot is different, and it works too. I don’t know why. Don’t ask me. Just fiddle those options and maybe it will work for you too.

I hit Start and got JACK going, then back in the Sonic Pi source tree I ran ./rp-app-bin and it worked! Sound came out of my speakers! I plugged in my headphones and they worked. Huzzah!

sonic-pi.el

That was all well and good, but nothing is fully working until it can be run from Emacs. A thousand thanks go to sonic-pi.el!

I used the package manager (M-x list-packages) to install sonic-pi; I didn’t need to install dash and osc because I already had them for some reason. Then I added this to init.el:

;; Sonic Pi (https://github.com/repl-electric/sonic-pi.el)

(require 'sonic-pi)

(add-hook 'sonic-pi-mode-hook

(lambda ()

;; This setq can go here instead if you wish

(setq sonic-pi-path "/usr/local/src/sonic-pi/")

(define-key ruby-mode-map "\C-c\C-c" 'sonic-pi-send-buffer)))

That last line is a customization of my own: I wanted C-c C-c to do the right thing the way it does in Org mode: here, I want it to play the current buffer. A good key combination like that is good to reuse.

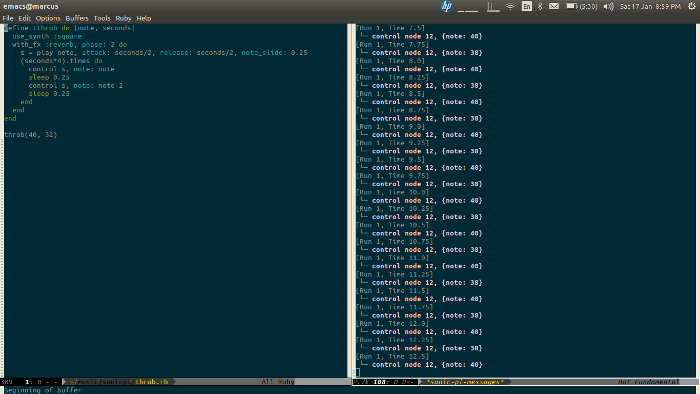

Then I could open up test.rb and try whatever I wanted. After a lot of fooling around I wrote this:

define :throb do |note, seconds|

use_synth :square

with_fx :reverb, phase: 2 do

s = play note, attack: seconds/2, release: seconds/2, note_slide: 0.25

(seconds*4).times do

control s, note: note

sleep 0.25

control s, note: note-2

sleep 0.25

end

end

end

throb(40, 32)

To get it connected, I ran M-x sonic-pi-mode then M-x sonic-pi-connect (it was already running, otherwise M-x sonic-pi-jack-in would do; sometimes M-x sonic-pi-restart is needed), then I hit C-c C-c … and a low uneasy throbbing sound comes out.

Amazing. Everything feels better when you can do it in Emacs. Especially coding music.

Clapping Music on Sonic Pi

A while ago I bought a Raspberry Pi, a very small and cheap computer, and I never did much with it. Then a few days ago I installed Sonic Pi on it and I’ve been having a lot of fun. (You don’t need to run it on a Pi, you can run it on Linux, Mac OS X or Windows, but I’m running it on my Pi and displaying it on my Ubuntu laptop.)

Sonic Pi is a friendly and easy-to-use GUI front end that puts Ruby on top of SuperCollider, “a programming language for real time audio synthesis and algorithmic composition.” SuperCollider is a bit daunting, but Sonic Pi makes it pretty easy to write programs that make music.

I’ve written before about “Clapping Music” by Steve Reich, who I count as one of my favourite composers: I enjoy his music enormously and listen to it every week. “Clapping Music” is written for two performers who begin by clapping out the same 12-beat rhythm eight times, then go out of phase: the first performer keeps clapping the same rhythm, but the second one claps a variation where the first beat is moved to the end of the 12 beats, so the second becomes first. That phasing keeps on until it wraps around on the 13th repetition and they are back in phase.

Here’s one animated version showing how the patterns shift:

And here’s another:

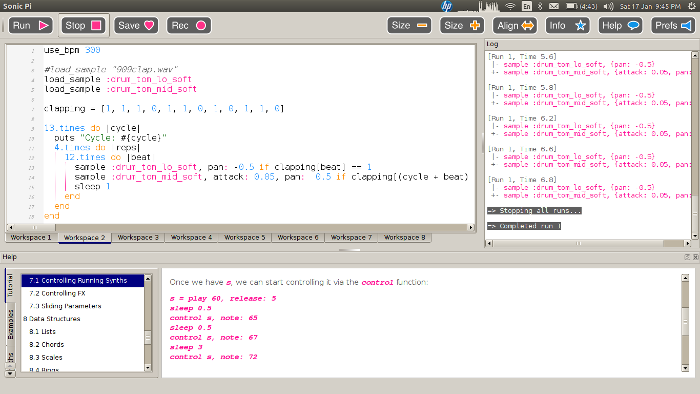

Here’s the code to have your Pi perform a rather mechanical version of the piece. The clapping array defines when a clap should be made. There are 13 cycles that run through the clapping array 4 times each. The first time through cycle is 0, and the two tom sounds are the same. The second time through cycle is 1, so the second tom is playing one beat ahead. Third time through cycle is 2, so the second tom is two beats ahead. It’s modulo 12 so it can wrap around: if the second tom is on the fifth cycle and ten beats in, there’s no 15th beat, so it needs to play the third beat.

use_bpm 300

load_sample :drum_tom_lo_soft

load_sample :drum_tom_mid_soft

clapping = [1, 1, 1, 0, 1, 1, 0, 1, 0, 1, 1, 0]

13.times do |cycle|

puts "Cycle: #{cycle}"

4.times do |reps|

12.times do |beat|

sample :drum_tom_lo_soft, pan: -0.5 if clapping[beat] == 1

sample :drum_tom_mid_soft, attack: 0.05, pan: 0.5 if clapping[(cycle + beat) % 12] == 1

sleep 1

end

end

end

If you’re running Sonic Pi, just paste that in and it will work. It sounds like this (Ogg format):

It only does four repetitions of each cycle because my Pi is old and not very powerful and for some reason eight made it go wonky. It’s not perfect even now, but the mistakes are minimal. I think a more recent and more powerful Pi would be all right, as would running Sonic Pi on a laptop or desktop.
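The shifting pattern is easy to see in plain Ruby, with no sound at all. This is a sketch for illustration only: it uses Ruby's `Array#rotate` in place of the modulo lookup, and the variable names are mine, not anything from Sonic Pi.

```ruby
# Plain Ruby, no audio: print the second performer's pattern for each cycle.
clapping = [1, 1, 1, 0, 1, 1, 0, 1, 0, 1, 1, 0]

13.times do |cycle|
  # rotate(cycle)[beat] is the same lookup as clapping[(cycle + beat) % 12]
  shifted = clapping.rotate(cycle)
  line = shifted.map { |hit| hit == 1 ? "x" : "." }.join
  puts format("cycle %2d: %s", cycle, line)
end

# Rough running time of the code above: 13 cycles of 4 repetitions of 12 beats.
bpm = 300
beats = 13 * 4 * 12
seconds = beats * 60.0 / bpm
puts "#{beats} beats at #{bpm} bpm takes about #{seconds.round(1)} seconds"
```

The first and last lines printed are identical, which is the two performers coming back into phase.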

It’s lacking all the excitement of a performance by real humans (some of which could be faked with a bit of randomization and effects), but it’s very cool to be able to do this. Algorithmic music turned into code!

NO AD

Re+Public has made NO AD, an augmented reality smartphone app that replaces ads in the New York City subway system with art.

They say:

New York is a city of commuters. 5.5 million riders move through its expansive subway system on an average weekday. Advertisers take advantage of this huge, captive audience by bombarding everyone with commercial messages.

Over the years, artists have attempted to take back this public space and our attention, but the system remains full of ads. This is why we created NO AD, a mobile app available now for FREE on iOS and Android. NO AD uses augmented reality technology to replace ads with artwork in realtime through your mobile device.

I wrote this in “Lunch with Zoia,” a short short story at the start of Libraries and Archives Augmenting the World:

Zoia was meeting George at a pub a ten-minute walk from her university that was also easy to get to from George’s public library, especially when the subways were working. She enjoyed the view as she left the university: she ran Adblock Lens, which she’d customized so it disabled every possible ad on campus as well as in the bus stops and on the billboards on the city streets. Sometimes she replaced them with live content, but today she just had the blanked spaces colored softly to blend in with what was around them. No ads, just a lot of beige and brown, slightly glitchy.

It’s not my original idea, but it’s nice to see it become real.

(In my browsers I use Adblock Plus, by the way, and I configure it to block the “unobtrusive” ads too. Wonderful. When I see people using the web without an ad blocker the experience revolts me.)

Miskatonic University Press
