Here’s the fourth (first, second, third) and penultimate post about the Augmented World Expo 2014. This is about the glasses and headsets—the wearables, as everyone there called them. This was all new to me and I was very happy to finally have a chance to try on all the new hardware that’s coming out.

What’s going on with the glasses is incredible. The technology is very advanced and it’s getting better every year. On the other hand, it is all completely and utterly distinguishable from magic. Eventually it won’t be, but from what I saw on the exhibit floor, no normal person is ever going to wear any glasses in day-to-day life. Eventually people will be wearing something (I hope contact lenses) but for now, no.

I include Google Glass with that, because anyone wearing them now isn’t normal. Rob Manson let me try on his Glass. My reaction: “That’s it?” “That’s it,” he said. (Interesting to note that there seemed to be no corporate Google presence at the conference at all. Lots of people wearing Glass, though. I hope they won’t think me rude if I say that four out of five Glass wearers, possibly nine out of ten (Rob not being one of those), look like smug bastards. They probably aren’t smug bastards, they just look it.) Now, I only wore the thing for a couple of minutes, but given all the hype and the fancy videos, I was expecting a lot more.

I was also expecting a lot more from Epson’s Moverio BT-200s and Meta’s 01 glasses.

Metaio has their system working on the BT-200s and I tried that out. In the exhibit hall (nice exhibit, very friendly and knowledgeable staff, lots of visitors) they had an engine up on a table and an AR maintenance app running on a tablet and the BT-200s. On the tablet the app looked very good: from all angles it recognized the parts of the engine and could highlight pieces (I assume they’d loaded in the CAD model) and aim arrows at the right part. A fine example of industrial applications, which a lot of AR work seems to be right now. I was surprised by the view in the glasses and how, well, crude it is. The cameras in the glasses were seeing what was in front of me and displaying that, with augments, on a sort of low-quality semitransparent video display floating a meter in front of my face. The resolution is 960 x 540, and Epson says the “perceived screen is equivalent to viewing an 80-inch image from 16.4 feet or 5 meters away.” I can’t see specs online that say what the field of view is as an angle, but it was something like 40°. Your fist at arm’s length is 10°, so imagine a rather grainy video four fists wide and two fists high, at arm’s length. The engine is shown with the augments, but it’s not exactly lined up with the real engine, so you’re seeing both at the same time. Hard to visualize this, I know.
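Epson’s “80-inch image from 5 meters” figure can be turned into an angle with a bit of trigonometry. Here’s a rough sketch in Python, assuming the 80 inches is the diagonal of a 16:9 image (the 960 x 540 resolution suggests that, but Epson’s quote doesn’t say so). The function and variable names are made up for illustration. By this arithmetic the quoted screen comes out at roughly 23° on the diagonal, or about two fists wide by one fist high, which is narrower than the rough guess above.

```python
import math

INCH_IN_METERS = 0.0254
FIST_DEG = 10.0  # rule of thumb from the text: a fist at arm's length spans about 10 degrees


def angular_size_deg(size, distance):
    """Angle in degrees subtended by a flat object of `size`, seen face-on
    and centred, from `distance` (both in the same units)."""
    return math.degrees(2 * math.atan((size / 2) / distance))


# Epson's quoted figures: an 80-inch image viewed from 5 meters.
diagonal_m = 80 * INCH_IN_METERS
distance_m = 5.0

# Assumption (not stated by Epson): 80 inches is the diagonal of a 16:9 image.
aspect_w, aspect_h = 16, 9
aspect_diag = math.hypot(aspect_w, aspect_h)
width_m = diagonal_m * aspect_w / aspect_diag
height_m = diagonal_m * aspect_h / aspect_diag

diag_deg = angular_size_deg(diagonal_m, distance_m)
horiz_deg = angular_size_deg(width_m, distance_m)
vert_deg = angular_size_deg(height_m, distance_m)

print(f"diagonal:   {diag_deg:.1f} degrees")
print(f"horizontal: {horiz_deg:.1f} degrees ({horiz_deg / FIST_DEG:.1f} fists)")
print(f"vertical:   {vert_deg:.1f} degrees ({vert_deg / FIST_DEG:.1f} fists)")
```

The same function works for any “N-inch screen at D meters” claim, as long as both numbers are converted to the same unit first.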

Meta had a VIP party on the Wednesday night at the company mansion.

The glasses are built at a rented $US15 million ($16.7 million), 80,937 square metre estate in Portola Valley, California, complete with pool, tennis court and 30-staff mansion. — Sydney Morning Herald

He now lives and works with 25 employees in a Los Altos mansion overlooking Silicon Valley and has four other properties where employees sleep at night.

“We all live together, work together, eat together,” he said. “At certain key points of the year we have mattresses lined all around the living room.” — CNN

On the exhibit floor they had a small space off in the corner, masked with black curtains and identifiable by the META logo and the line-up of people waiting to get in to try the glasses. I lined up, waited, chatted a bit with people near me, and eventually I was ushered into their chamber of mystery and a friendly fellow put the glasses (really a headset right now) on my head and ran me through the demo.

The field of view again was surprisingly small. There was no fancy animation going on, it was just outlined shapes and glowing orbs (if I recall correctly). I’d reach out to grab and move objects, or look to shoot them down, that sort of thing. As a piece of technology under construction, it was impressive, but in no way did it match the hype I’ve seen.

Now, my experience lasted about three minutes, and I’m no engineer or cyborg, and the tech will be much further along when it goes on sale next year, but I admit I was expecting to see something close to the videos they make. That video shows the Pro version, selling for $3,650 USD, so I expect it will be much fancier, but still. They say the field of view is 15 times that of Google Glass … if that’s five times wider and three times higher, it’s still not much, because Glass’s display seems very small. The demo reels must show the AR view filling up the entire screen whereas in real life it would only be a small part of your overall view.

That said, the Meta glasses do show a full 3D immersive environment (albeit a small one). They’re not just showing a video floating in midair. Even if the Meta glasses start off working with just a sort of small box in front of you, you’re really grabbing and moving virtual objects in the air in front of you. That is impressive. And it will only get better, and fast.

I had a brief visit to the Lumus Optical booth, where I tried on a very nice pair of glasses (DK40, I think) with a rich heads-up display. I’m not sure how gaffed it was as a demo, but in my right eye I was seeing a flight controller’s view of airplanes nearby. I could look all around, 360°, up and down, and see little plane icons out there. If I looked at one for a moment it zoomed in on it and popped up a window with all the information about it: airline, flight number, destination, airplane model, etc. The two people at the booth were busy with other people so I wasn’t able to ask them any questions. In my brief test, I liked these glasses: they were trying to do less than the others, but they succeeded better. I’d like to try an astronomy app with a display like this.

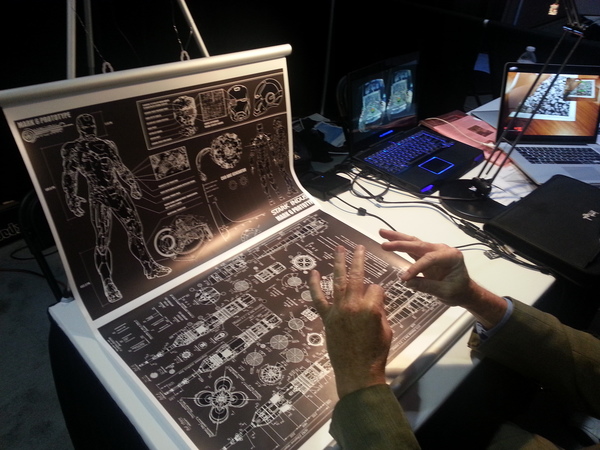

Mark Billinghurst’s HitLabNZ had a booth and they were showing their work. They’d taken two depth-sensing cameras and stuck them onto the front of an Oculus Rift: the Rift gives great video and rendered virtual objects and the cameras locate the hands and things in the real world. Combined you get a nice system. I tried it out and it was also impressive, but it would have taken me a while longer to get a decent sense of where things are in the virtual space: I was never reaching out far enough. This lab is doing a lot of interesting research and advancing the field.

I also tried a similar hack, an Oculus Rift with a Leap Motion depth sensor duct-taped to the front of it. Leap Motion has just released a new version of the software (works on the same hardware) and the new skeletal tracking is very impressive. I don’t have any pictures of that and I don’t see any demo videos online, but it worked very well. If you fold your hand up into a fist, it knows.

Here’s a demo video. Imagine that instead of seeing all that on a screen in front of you, you’re wearing a headset (an Oculus) and it’s all floating around in front of you, depth and all, and you can look in any direction. It’s impressive.

Miskatonic University Press
