Wednesday 28 May 2014 was the first day of Augmented World Expo 2014, and here are my notes on that, following on the AR standards preconference the day before, and skipping over the morning’s keynote where Robert Scoble was utterly unconcerned about privacy. The AugmentedRealityorg channel on YouTube has all the videos. (Congratulations again to them for making all the talks available for free, and so quickly.)

There was a birds of a feather session at 8 am. I put out a sign at a table: LIBRARIES. No one sat down until three fellows came along and needed a place to sit because all the other tables were full. They worked for DARPA and were modifying Android devices so soldiers could use and wear them in the field. It was the first time anyone ever said MilSpec to me. “This isn’t like my usual library conferences,” I thought.

Things got started at nine, when Ori Inbar (@comogard) gave an enthusiastic welcome. He’s done years of hard work on this event and AR, and he was very warmly received. He set out five trends in AR right now:

- from gimmick to value

- from mobile to wearable (10 companies bringing glasses to market this year)

- from consumer to enterprise

- from GPS to 3Difying the world

- the new new interface

Then co-organizer Tish Shute (@tishshute) followed up. She mentioned “strong AR” (a combination of full eyewear and gestures) and wireless body area networks (new to me). Then there was Scoble and then Nir Eyal, who gave a confident AR-free talk about how to apply behaviourism to marketing to make more money.

But then it was Ronald Azuma! It was he who in 1997 (!) in A Survey of Augmented Reality set out the three characteristics a system must have to be augmented reality, and this definition still holds:

- Combines real and virtual;

- Interactive in real time;

- Registered in 3-D.

He’s working at Intel now, and gave a talk called “Leviathan: Inspiring New Forms of Storytelling via Augmented Reality.” Leviathan is the Scott Westerfeld novel, and Westerfeld wrote about the Intel Leviathan Project.

He listed four approaches to memorable and involving AR:

- take content that is already compelling and bring it into our world (as they did)

- reinforcing: like 110 Stories, Westwood Experience

- reskinning: take what reality offers and twist it, convert world around you to fictional environment, e.g. belief circles in Rainbows End

- remembering: much of the world is mundane, but what happened there is not; some memories are important to everyone, some to just you

A few talks later was Mark Billinghurst from the Human Interface Technology Lab New Zealand. He said he wanted to make Iron Man real, with a contact lens display, free-space hand and body tracking and speech/gesture recognition. (Iron Man came up several times at the conference, more than Rainbows End.) He talked about research they’ve done on multimodal interfaces, which work with both voice and gesture. Gesturing is best for some tasks, but combined with voice, the two complement each other well. A usability study of multimodal input in an augmented reality environment (Virtual Reality 17:4, November 2013) has it all, I think.

In the afternoon there was a great session on art and museums. The academics (like Azuma and Billinghurst, and Ishii the next day) and the artists were doing the most interesting and exciting work I saw at the conference. Most of the vendors just played videos from their web sites. The artists and academics are pushing things, trying new things, seeing what breaks, and always doing it with a critical eye and thinking about what it means in the world.

First was B.C. Biermann, who asked, “How can citizens make incursions into public spaces?” He posed an AR hypothesis: “Can emerging technologies, and AR in particular, democratize access to public, typically urban spaces, form a new mode of communication, and give a voice to private citizens and artists?” He’s done some really interesting work, some as part of Re+Public.

He ended with this question, which made me think about how art has changed, or perhaps how it hasn’t:

“How can we use this technology for the benefit of the collective as opposed to an individualistic, material-seeking profit-seeking enterprise? Not that that’s necessarily a bad thing, but I think that technology can also be used in both verticals, a beneficial collective vertical and also maybe one that is monetizable.”

Then Patrick Lichty spoke on “ARt: Crossing Boundaries.” He ran through a “laundry list of things that are on my scholarly radar,” covering a lot of ground quickly. There’s much good stuff to be seen here, too much to pull out and list, but watch it for a solid fifteen-minute overview of AR and art.

Nicholas Henchoz from EPFL-ECAL in Lausanne was next, with “Is AR the Next Media?”

Some (fragmentary?) quotes I wrote down:

- “Visual language and narrative principles for digital content. Relation between the real and the virtual world.”

- “Continuum in the perception of materiality. Direct link with the physical world. Credibility of an animated object. Real-time perception.”

- “The space around the object is also part of the experience.”

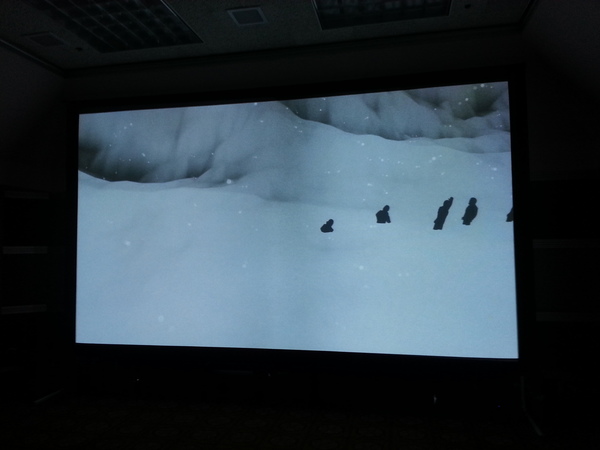

Henchoz and his team had a room set up with their installation AR art! It ran all through the conference. One piece was Ghosts, by Thomas Eberwein & Tim Gfrerer. It has a huge screen showing snowy mountains. When you stand in front of it and wave your arms, it sees you and puts your silhouette into the scene, buried up to your waist in the snow, in a long line with all the other people who had done the same. (In the past hour or two, or since the system restarted—I asked if it stored everyone in there, but it doesn’t.)

They also had Tatooar set up. There was a stack of temporary tattoos, and if you put one on and looked at it on the monitor, it got augmented. Here’s my real arm and my augmented arm. It doesn’t show in the still photo, but the auggie was animated and had an eye that opened up.

Finally there was Giovanni Landi, who talked about three projects he’s done with AR and cultural heritage: AR views of Masaccio’s Holy Trinity fresco (particularly notable for its use of perspective), the Spanish Chapel at the Basilica of Santa Maria Novella in Florence, and panoramic multispectral imaging of early 1900s Sicilian devotional art. I took very few notes here because his talk was so interesting.

A later session involved Matthew Ramirez, a lead on AR at Mimas in Manchester, where they use AR in higher education. Their showcase has videos of all their best work, and it’s worth a look.

He listed some lessons learned:

- use of AR should be more contextual and linked to the object

- best used in short bite-sized learning chunks

- must deliver unique learning values different from online support

- user should become less conscious of the technology and more engaged with the learning/object

- users learn in different ways and AR may not be appropriate for all students

I chatted with him later and he also said they’ve had much more success working with faculty on courses that have lots of students, and where the tools and techniques can be adapted for other courses. The small intense boutique projects don’t scale and don’t attract wide interest.

The last one from the day I’ll mention was a talk by Seth Hunter, formerly of the MIT Media Lab and now at Intel, “A New Shared Medium: Designing Social Experiences at a Distance.” He talked about WaaZam, which merges people in different places together into one shared virtual space (a 2.5D world, he called it). Skype lets people talk to each other over video, but everyone’s in their own little box. Little kids find that hard to deal with—he mentioned parents who are away from their children, and how much more engaging and involving and fun it is for the kids when everyone is in the same online space.

“The new shared medium has already been forecasted by artists and hackers,” he said. This was a nice talk about good work for real people.

Hunter was the only person I saw at AWE 2014 to show a GitHub URL! Here it is: https://github.com/arturoc/ofxGstRTP. All the code they’re developing is open and available.

Miskatonic University Press
